One of my favourite new iOS 9 features announced at this year's WWDC is the new Metal Performance Shaders (MPS) framework. MPS were explained by Anna Tikhonova in the second half of What's New in Metal, Part 2. In a nutshell, they are a framework of highly optimised data-parallel algorithms for common image processing tasks such as convolution (e.g. blurs and edge detection), resampling (e.g. scaling) and morphology (e.g. dilate and erode). The feature set isn't a million miles away from Accelerate's vImage filters, but the MPS filters run on the GPU rather than on the CPU's vector units.

Apple have gone to extraordinary lengths to get the best performance from the filters. For example, there are several different ways to implement a Gaussian blur, each with its own start-up costs and overheads, and which one performs best depends on the input image, the blur parameters, the thread configuration and so on.

To cater for this, the MPSImageGaussianBlur filter actually contains 821 different Gaussian blur implementations, and MPS selects the best one for the current state of the shader and the environment. Whereas a naive implementation of a Gaussian blur may struggle along at 3fps with a 20 pixel sigma, the MPS version can easily manage 60fps with a 60 pixel sigma and, in my experiments, is as fast as or sometimes faster than simpler blurs such as box or tent.
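As a rough illustration of why a large sigma hurts a naive blur, here is a small sketch in plain, present-day Swift (no Metal involved). It assumes a textbook Gaussian kernel truncated at a radius of ceil(3σ), which is a common rule of thumb rather than anything MPS documents, and counts texture reads per output pixel for a direct 2D convolution versus a separable horizontal-then-vertical pass. The helpers gaussianKernel and tapsPerPixel are hypothetical, and the sketch says nothing about which of the 821 strategies MPS actually picks.

```swift
import Foundation

/// Builds a normalised 1D Gaussian kernel. The radius of ceil(3 * sigma)
/// is an assumed rule of thumb, not the rule MPS uses internally.
func gaussianKernel(sigma: Double) -> [Double] {
    let radius = Int(ceil(3 * sigma))
    let weights = (-radius...radius).map { x -> Double in
        let d = Double(x)
        return exp(-(d * d) / (2 * sigma * sigma))
    }
    // Normalise so the weights sum to 1 and the blur preserves brightness.
    let total = weights.reduce(0, +)
    return weights.map { $0 / total }
}

/// Texture reads per output pixel: a naive square 2D kernel versus two
/// separable 1D passes (horizontal, then vertical).
func tapsPerPixel(sigma: Double) -> (naive: Int, separable: Int) {
    let width = gaussianKernel(sigma: sigma).count
    return (naive: width * width, separable: 2 * width)
}

for sigma in [3.0, 20.0, 60.0] {
    let taps = tapsPerPixel(sigma: sigma)
    print("sigma \(sigma): naive \(taps.naive), separable \(taps.separable)")
}
```

Under those assumptions a 20 pixel sigma needs a 121-tap kernel, so a naive 2D convolution reads 14,641 texels per pixel against 242 for two separable passes; at a 60 pixel sigma the figures are 130,321 and 722.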

MPS are really easy to use in your own code. Let's say we want to add a Gaussian blur to an MTKView. First off, we need our device and, with Swift 2.0, we can use the guard statement to bail out if no Metal device is available:

```swift
guard let device = MTLCreateSystemDefaultDevice() else {
    // No Metal-capable GPU: nothing to set up.
    return
}

self.device = device
```

Then we instantiate an MPS Gaussian blur:

```swift
let blur = MPSImageGaussianBlur(device: device, sigma: 3)
```

Finally, after ending encoding on our existing compute command encoder, but before presenting our drawable, we call the blur's encodeToCommandBuffer() method:

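```swift
commandEncoder.setTexture(texture, atIndex: 0)
commandEncoder.dispatchThreadgroups(threadgroupsPerGrid, threadsPerThreadgroup: threadsPerThreadgroup)
commandEncoder.endEncoding()

// MPS creates its own encoder on the command buffer, so ours must be ended first.
// Writing into drawable.texture from a compute pass requires the MTKView's
// framebufferOnly property to be set to false.
blur.encodeToCommandBuffer(commandBuffer, sourceTexture: texture, destinationTexture: drawable.texture)

commandBuffer.presentDrawable(drawable)
commandBuffer.commit()
```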

...and, voila, a beautifully blurred image.

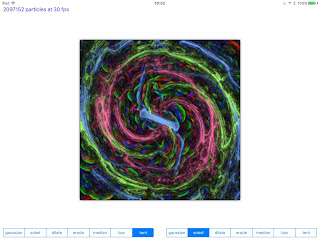

I've created a branch of my MetalKit Particles project to demonstrate MPS (sadly, as of Beta 2, MPS is only supported on iOS; hopefully OS X support is coming soon). The demo contains a particle system to which I apply two filters, and it presents the user with a pair of segmented controls for choosing from a list of seven filter options.

All seven of these filters extend MPSUnaryImageKernel, which contains encodeToCommandBuffer(), so I keep them in an array of type [MPSUnaryImageKernel]:

```swift
let blur = MPSImageGaussianBlur(device: device, sigma: 3)
let sobel = MPSImageSobel(device: device)
let dilate = MPSImageAreaMax(device: device, kernelWidth: 5, kernelHeight: 5)
let erode = MPSImageAreaMin(device: device, kernelWidth: 5, kernelHeight: 5)
let median = MPSImageMedian(device: device, kernelDiameter: 3)
let box = MPSImageBox(device: device, kernelWidth: 9, kernelHeight: 9)
let tent = MPSImageTent(device: device, kernelWidth: 9, kernelHeight: 9)

filters = [blur, sobel, dilate, erode, median, box, tent]
```
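The names dilate and erode map onto MPSImageAreaMax and MPSImageAreaMin because morphological dilation replaces each pixel with the maximum of its neighbourhood and erosion with the minimum. The sketch below shows that on one row of made-up greyscale values in plain, present-day Swift. The areaFilter helper is hypothetical, it works in 1D rather than on a 2D texture, and its edge handling is simply a truncated window (MPS controls edges through the kernel's edgeMode property).

```swift
/// 1D "area" filter: each output value is the max (dilate) or min (erode)
/// of the inputs within `radius` of it. Windows are truncated at the ends of
/// the row, which for max/min gives the same result as clamping to the edge.
func areaFilter(_ row: [Int], radius: Int, combine: (Int, Int) -> Int) -> [Int] {
    guard !row.isEmpty else { return [] }
    return row.indices.map { i in
        let lo = max(0, i - radius)
        let hi = min(row.count - 1, i + radius)
        return row[lo...hi].reduce(row[lo], combine)
    }
}

let row = [0, 0, 9, 0, 0, 5, 5, 5, 0]
// A kernel width of 3 corresponds to a radius of 1 either side.
let dilated = areaFilter(row, radius: 1, combine: max)
let eroded = areaFilter(row, radius: 1, combine: min)
print(dilated)
print(eroded)
```

The isolated bright value spreads to its neighbours under dilation and vanishes under erosion, while the run of three 5s shrinks to a single pixel.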

I've extended my ParticleLab component to accept a tuple of two integers that defines which filters to use:

```swift
var filterIndexes: (one: Int, two: Int) = (0, 3)
```

...and apply the MPS kernels based on those filter indexes:

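```swift
// First filter: texture -> drawable (this is what gets presented).
filters[filterIndexes.one].encodeToCommandBuffer(commandBuffer, sourceTexture: texture, destinationTexture: drawable.texture)

// Second filter: drawable -> texture (written back into our own texture).
filters[filterIndexes.two].encodeToCommandBuffer(commandBuffer, sourceTexture: drawable.texture, destinationTexture: texture)
```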
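Note the direction of the two calls: the first filter writes into the drawable, which is what is presented on screen, while the second writes back into texture. So the second filter's result is not displayed on the frame it is computed; assuming the particle system draws into texture again on the next frame (this post doesn't show that part of ParticleLab), that result is carried forward and compounded frame after frame. The sketch below models just that data flow in plain, present-day Swift, with closures standing in for the two MPS kernels; Filter and renderFrame are hypothetical names.

```swift
/// Stand-in for an MPS unary kernel: reads a source buffer, returns a new one.
typealias Filter = ([Int]) -> [Int]

/// One frame of the two-filter pass:
///   filter one: texture  -> drawable (presented on screen)
///   filter two: drawable -> texture  (kept for the next frame)
func renderFrame(texture: inout [Int], filters: [Filter], indexes: (one: Int, two: Int)) -> [Int] {
    let drawable = filters[indexes.one](texture)
    texture = filters[indexes.two](drawable)
    return drawable
}

let addOne: Filter = { $0.map { $0 + 1 } }
let doubled: Filter = { $0.map { $0 * 2 } }

var texture = [1, 2, 3]
let shown = renderFrame(texture: &texture, filters: [addOne, doubled], indexes: (one: 0, two: 1))
print(shown)    // what is presented this frame
print(texture)  // what carries over to the next frame
```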

The final results can be quite pretty; for example, combining a Gaussian blur with a dilate filter gives an "ink in milk drop" style effect.

This code is available in my GitHub repository. It requires Xcode 7 (Beta 2) and an A8 or A8X device running iOS 9 (Beta 2). Enjoy!