Following on from my recent post on Shinpuru Image, my Swift syntactic sugar for Core Image and vImage filters, here's a new addition: SIHistogramCalculation().

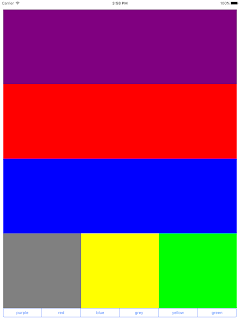

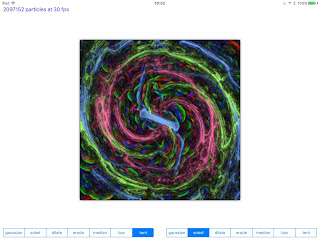

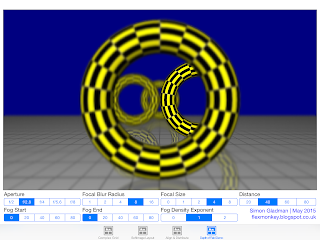

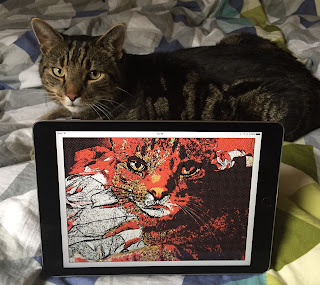

SIHistogramCalculation() returns a tuple containing four arrays of 256 unsigned integers, which can be used to create an image histogram representing the tonal distribution of an image. It's based on the vImage vImageHistogramCalculation_ARGB8888() function, and calling it is as simple as:

    let histogram = UIImage(named: "glass.jpg")?.SIHistogramCalculation()
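
To make the shape of that result concrete, here is a minimal sketch of what a per-channel histogram computes, written in plain current Swift rather than with vImage. It is not how Shinpuru Image does it internally: the function name, the `ChannelHistogram` alias and the `alpha` label are assumptions for illustration (the post itself only shows `red`, `green` and `blue`), and the input is a hand-built ARGB8888 byte array instead of a real image.

```swift
// Hypothetical stand-in for the tuple described above: four arrays of 256 counts.
typealias ChannelHistogram = (alpha: [UInt], red: [UInt], green: [UInt], blue: [UInt])

/// Counts how often each 8-bit value occurs in each channel of an
/// interleaved ARGB8888 buffer (4 bytes per pixel, in the order A, R, G, B).
func histogram(ofARGB8888 pixels: [UInt8]) -> ChannelHistogram {
    precondition(pixels.count % 4 == 0, "expected 4 bytes per pixel")

    var alpha = [UInt](repeating: 0, count: 256)
    var red = [UInt](repeating: 0, count: 256)
    var green = [UInt](repeating: 0, count: 256)
    var blue = [UInt](repeating: 0, count: 256)

    // Step through the buffer one pixel at a time and bump the matching bin.
    for offset in stride(from: 0, to: pixels.count, by: 4) {
        alpha[Int(pixels[offset])] += 1
        red[Int(pixels[offset + 1])] += 1
        green[Int(pixels[offset + 2])] += 1
        blue[Int(pixels[offset + 3])] += 1
    }
    return (alpha, red, green, blue)
}

// Two opaque pixels: pure red and mid grey.
let sample: [UInt8] = [255, 255, 0, 0,
                       255, 128, 128, 128]
let result = histogram(ofARGB8888: sample)
print(result.red[255], result.red[128], result.alpha[255])  // prints "1 1 2"
```

Each array always has 256 entries regardless of image size, which is why the charting code later in the post can index bins 0 through 255 directly.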

I've created a demonstration class, Histogram, which uses Daniel Cohen Gindi's ios-charts project to display a nice-looking histogram chart. The demo uses three filters, SIWhitePointAdjust(), SIColorControls() and SIGammaAdjust(), which are chained together. The output image populates a UIImageView and acts as the source for SIHistogramCalculation():

    let targetColor = UIColor(red: CGFloat(redSlider.value),
        green: CGFloat(greenSlider.value),
        blue: CGFloat(blueSlider.value),
        alpha: CGFloat(1.0))

    let image = UIImage(named: "glass.jpg")?
        .SIWhitePointAdjust(color: targetColor)
        .SIColorControls(saturation: saturationSlider.value, brightness: brightnessSlider.value, contrast: contrastSlider.value)
        .SIGammaAdjust(power: gammaSlider.value)

    let histogram = image?.SIHistogramCalculation()

    imageView.image = image
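
The chaining works because each filter returns a new image, and the `?` after `UIImage(named:)` means the whole chain is skipped if the image fails to load. The sketch below shows that pattern in isolation using current Swift syntax. Everything in it is hypothetical: `GrayImage`, `loadImage(named:)` and the two toy filters are stand-ins so the example runs without UIKit, not Shinpuru Image's real types or filters.

```swift
import Foundation

// Hypothetical stand-in for UIImage: a grayscale image with samples in 0...1.
struct GrayImage {
    var samples: [Double]

    // Each "filter" returns a new image, which is what makes chaining possible.
    func gammaAdjust(power: Double) -> GrayImage {
        return GrayImage(samples: samples.map { pow($0, power) })
    }

    func brighten(by amount: Double) -> GrayImage {
        // Clamp so the result stays inside 0...1.
        return GrayImage(samples: samples.map { min(max($0 + amount, 0), 1) })
    }
}

// Hypothetical failable loader, mirroring how UIImage(named:) returns an optional.
func loadImage(named name: String) -> GrayImage? {
    return name == "glass.jpg" ? GrayImage(samples: [0.0, 0.5, 1.0]) : nil
}

// The image exists, so both filters run in order.
let adjusted = loadImage(named: "glass.jpg")?
    .gammaAdjust(power: 2.0)
    .brighten(by: 0.25)

// The image is missing, so the chain short-circuits to nil.
let missing = loadImage(named: "nope.jpg")?
    .gammaAdjust(power: 2.0)
```

This is also why `histogram` in the post's code is optional and is later force-unwrapped: if the image fails to load, every step after it yields nil.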

I then iterate over the histogram object building three arrays of ChartDataEntry:

    var redChartData = [ChartDataEntry](count: 256, repeatedValue: ChartDataEntry())
    var greenChartData = [ChartDataEntry](count: 256, repeatedValue: ChartDataEntry())
    var blueChartData = [ChartDataEntry](count: 256, repeatedValue: ChartDataEntry())

    // One entry per histogram bin; the bin index doubles as the x index.
    for i: Int in 0 ... 255
    {
        redChartData[i] = ( ChartDataEntry(value: Float(histogram!.red[i]), xIndex: i) )
        greenChartData[i] = ( ChartDataEntry(value: Float(histogram!.green[i]), xIndex: i) )
        blueChartData[i] = ( ChartDataEntry(value: Float(histogram!.blue[i]), xIndex: i) )
    }
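
The same bin-to-entry conversion can also be written as a single `map` over each channel, which avoids pre-filling the arrays with placeholder entries. This is only a sketch in current Swift syntax: `Entry` is a hypothetical stand-in for ios-charts' `ChartDataEntry` so that the example runs without the library, and `entries(for:)` is an illustrative helper, not part of Shinpuru Image or ios-charts.

```swift
// Hypothetical stand-in for ChartDataEntry, carrying the same two values
// the post passes to it: a y value and an x index.
struct Entry: Equatable {
    let value: Float
    let xIndex: Int
}

/// Converts one channel's bin counts into chart entries,
/// using each bin's position as its x index.
func entries(for bins: [UInt]) -> [Entry] {
    return bins.enumerated().map { index, count in
        Entry(value: Float(count), xIndex: index)
    }
}

// Shortened for illustration; a real channel has 256 bins.
let redBins: [UInt] = [4, 0, 7]
let redEntries = entries(for: redBins)
print(redEntries[2].value, redEntries[2].xIndex)  // prints "7.0 2"
```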

...which in turn are used to create LineChartDataSet instances which are passed to the LineChartView's data:

    let redChartDataSet = LineChartDataSet(yVals: redChartData, label: "red")
    let greenChartDataSet = LineChartDataSet(yVals: greenChartData, label: "green")
    let blueChartDataSet = LineChartDataSet(yVals: blueChartData, label: "blue")

    // foo supplies the x values; its definition is not shown in this snippet.
    let lineChartData = LineChartData(xVals: foo, dataSets: [redChartDataSet, greenChartDataSet, blueChartDataSet])

    chart.data = lineChartData

...and that's all there is to the implementation! All the boilerplate of wrestling with vImage's strange syntax and UnsafeMutablePointers is hidden away inside Shinpuru Image, leaving you to create beautiful histograms!

One caveat: these filters are horribly slow in the iOS simulator, but this demo runs beautifully smoothly on my iPad Air 2. My plan is to add asynchronous support to Shinpuru Image so that running filters in the background is really easy.

Shinpuru Image is open source and the project lives in my GitHub repository here.